I was working on a simple mesh for Unity in Blender 2.65a, and I could not for the life of me remember how to display normals (I needed to find some flipped normals that were causing faces to render improperly). Some sources say the normals can be displayed from the N-Key panel in the 3D view, but I thought a more concrete bit of information was in order.

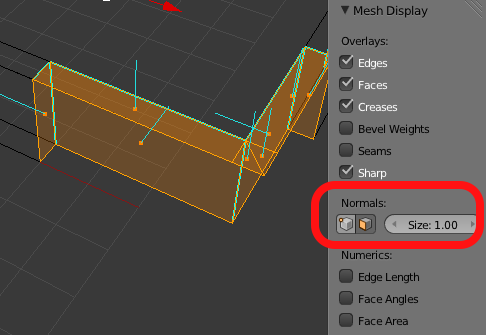

First, normals are only displayed in Edit Mode. While in Edit Mode, hover the mouse over the 3D viewport and press the N key to open the N-Key panel. Under the Mesh Display category you should see two normal buttons: one toggles the display of vertex normals, and the other toggles face normals.
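
A bit of background on what "flipped" means: a face's normal comes from the order in which its vertices are listed (its winding), so a flipped face is one whose vertices run in the reverse order. Unity's default shaders cull back faces, so a flipped face tends to disappear from the side you expect to see it. The sketch below is a minimal pure-Python illustration, not Blender or Unity code. It assumes triangles with counter-clockwise winding (right-hand rule), and the helper names `face_normal` and `flipped_faces` are hypothetical. The centroid check only makes sense for convex, closed meshes; for anything else, the viewport normal display described above is the reliable way to look.

```python
# Minimal sketch (hypothetical helpers): how vertex winding determines a
# face normal, plus a flipped-normal check valid only for convex, closed meshes.

def sub(a, b):
    """Component-wise difference of two 3D vectors."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def face_normal(verts, face):
    """Unnormalized normal of a triangle, assuming counter-clockwise winding.

    Reversing the vertex order of `face` reverses the returned vector,
    which is exactly what a flipped normal is.
    """
    a, b, c = (verts[i] for i in face[:3])
    return cross(sub(b, a), sub(c, a))

def flipped_faces(verts, faces):
    """Return indices of faces whose normal points toward the mesh centroid.

    Only meaningful for convex, closed meshes, where every correct normal
    should point away from the centroid.
    """
    n = len(verts)
    centroid = tuple(sum(v[k] for v in verts) / n for k in range(3))
    result = []
    for i, face in enumerate(faces):
        outward = sub(verts[face[0]], centroid)  # direction away from the center
        if dot(face_normal(verts, face), outward) < 0:
            result.append(i)
    return result
```

For example, with a tetrahedron whose faces all wind outward, `flipped_faces` returns an empty list; reversing the vertex order of one face makes that face's index show up in the result.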

The nice thing about Unity is how it handles updated assets: saving over an old FBX file replaces every instance of the mesh in your scenes and prefabs, provided you haven't done anything wacky. One save and all the normals are set where they need to be. Not to mention, the Unity 4.0 interface upgrades are a great addition!